The effectiveness of language models depends on their ability to imitate the step-by-step deduction. However, this logic sequence is a resource-intensive and can be useless for simple questions that do not require extended calculations. Lack of awareness regarding the complexity of the task is one of the main challenges in these models. They often default on detailed logic for queries that can be answered directly. Such an approach increases token consumption, extends the response time, and the system increases latency and memory consumption. As a result, the language models dollo need to be equipped with a mechanism that allows them to make autonomous decisions on whether to think or whether to react briefly.

The current tools trying to solve this issue depend either manually set or prompt engineering to switch between short and long answers. Some methods use different models and root questions based on the estimate of the complexity. Nevertheless, these external routing systems often lack the power of the target model and fail to make the best decisions. Other techniques depend on stable rules instead of prompt-based signals, but this dynamic understanding, but this dynamic understanding. Despite some updates, these approaches fail to enable complete autonomous and reference-sensitive control in the same model.

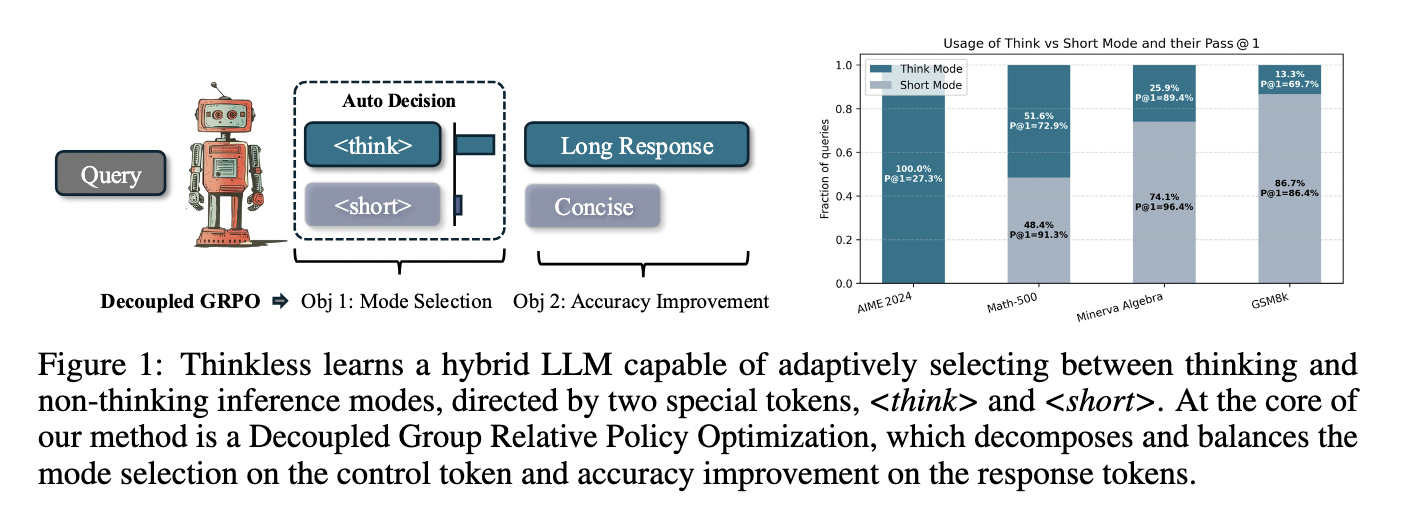

Researchers at the National University of Singapore introduced a new structure called Thinkless, which equipped a language model with the ability to dynamic decisions using short or long-form logic. This structure is built on reinforcement education and presents two special control tokens –

The method contains two stages: warm-up distillation and reinforcement education. In the distillation phase, two expert models are given thoughtless training using Dello’s output – which specializes in a short response and another in detailed logic. This phase helps the model establish the Pay FIRM link between the control token and the desired logic formation. The reinforcement finishes the model’s ability to determine which logic mode to use after the learning phase. Diggers decompose education into two different objectives: one to train a control token and the other to improve the response tokens. This approach avoids the Grad Dale imbalance in the previous Models Delo, where long answers will give more power to the learning signal, which causes a diversity of logic. Thoughtlessly ensures that both

When evaluated, the thoughtless long-form logic was significantly reduced while preserving high accuracy. On the Meenarva algebra’s benchmark, the model used

Overall, this study at the National University of Singapore presents an amazing solution to the inaccuracies of the same logic in large -language models. The task enables the complexity of the complex and adjusting their forecasting strategy accordingly, by presenting a mechanism, the best of thoughtless accuracy and efficiency. The method adjusts the depth of logic and response precision, without depending on the fixed rules, providing a data -based approach to a more intelligent language model behavior.

Check the paper and github page. All credit for this research goes to researchers of this project. Also, feel free to follow us Twitter And don’t forget to join us 95K+ ML Subredit And subscribe Our newsletter.

Nikhil is an intern consultant at MarketechPost. He is gaining a dual degree in materials in the technology of the Indian organization in Kharagpur. Nikhil AI/ML is enthusiastic that always researches application in areas such as biometrials and biomedical vigels. With a strong background in the physical expression, he is looking for new progress and chances of contributing.