The Alibaba Qwen team has introduced Qwen-VLo, a new addition to the Qwen model family designed to unify multimodal understanding and image generation within a single framework. Positioned as a powerful creative engine, Qwen-VLo lets users generate, edit, and refine high-quality visual content from text, sketches, and commands, in multiple languages and through step-by-step scene construction. The model marks a significant leap in multimodal AI, with clear applications for designers, marketers, content creators, and educators.

Integrated vision-language modeling

Qwen-VLo builds on Qwen-VL, Alibaba’s earlier vision-language model, by extending it with image generation capabilities. The model integrates visual and textual modalities in both directions: it can interpret images and produce relevant textual descriptions or respond to visual prompts, while also generating visuals from textual or sketch-based instructions. This bidirectional flow enables seamless interaction between modalities and streamlines creative workflows.

Main features of Qwen-VLo

- Concept-to-Polish Visual Generation: Qwen-VLo can generate high-resolution images from rough inputs such as text prompts or simple sketches. The model understands abstract concepts and turns them into polished, aesthetically refined visuals. This capability is well suited to early-stage ideation in design and branding.

- On-the-Fly Visual Editing: With natural language commands, users can iteratively refine images, adjusting object placement, lighting, color themes, and composition. Qwen-VLo simplifies tasks such as retouching product photography or customizing digital ads, reducing the need for manual editing tools.

- Multilingual Multimodal Understanding: Qwen-VLo is trained with support for multiple languages, allowing users from different linguistic backgrounds to interact with the model. This makes it suitable for global deployment in industries such as e-commerce, publishing, and education.

- Progressive Scene Construction: Rather than rendering a complex scene in a single pass, Qwen-VLo supports progressive generation. Users can guide the model step by step, adding elements, refining interactions, and adjusting the layout incrementally. This mirrors natural human creativity and gives users more control over the output.

Architecture and training

While the public blog does not detail the model architecture, Qwen-VLo likely inherits and extends the Transformer-based architecture of the Qwen-VL line. The enhancements focus on fusion strategies for cross-modal attention, adaptive fine-tuning pipelines, and the integration of structured representations for better spatial and semantic grounding.
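Because the public material does not describe how Qwen-VLo fuses modalities, the following is only a generic illustration of the cross-modal attention idea, not Qwen-VLo's implementation. It assumes text-token vectors act as queries over image-patch vectors (keys and values) and uses plain scaled dot-product attention without the learned projection matrices a real Transformer would apply. The function names and toy vectors are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: List[Vector], keys: List[Vector],
                    values: List[Vector]) -> List[Vector]:
    """Hypothetical sketch of scaled dot-product cross-attention.

    Each query (e.g. a text token) attends over keys/values taken from the
    other modality (e.g. image patches). No learned projections are applied.
    """
    if len(keys) != len(values):
        raise ValueError("keys and values must have the same length")
    scale = 1.0 / math.sqrt(len(keys[0]))
    dim_v = len(values[0])
    outputs = []
    for q in queries:
        # Similarity of this text token to every image patch.
        weights = softmax([dot(q, k) * scale for k in keys])
        # Weighted sum of patch values gives the fused representation.
        fused_row = [sum(w * v[i] for w, v in zip(weights, values))
                     for i in range(dim_v)]
        outputs.append(fused_row)
    return outputs


# Toy example: two "text tokens" attending over three "image patches".
text_tokens = [[1.0, 0.0], [0.0, 1.0]]
image_patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
patch_values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

fused = cross_attention(text_tokens, image_patches, patch_values)
for row in fused:
    print([round(x, 3) for x in row])
```

Each output row is a weighted mix of the image-patch values, so a text token whose vector aligns with a particular patch draws more of its representation from that patch. A full model would repeat this across many heads and layers with learned weights.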

The training data includes multilingual image-text pairs, sketches paired with ground-truth images, and real-world product photography. This diverse corpus allows Qwen-VLo to generalize well across tasks such as composition generation, layout refinement, and image captioning.

Target use cases

- Design and Marketing: Qwen-VLo’s ability to convert text concepts into polished visuals makes it ideal for ad creatives, storyboards, product mockups, and promotional content.

- Education: Teachers can visualize abstract concepts (e.g., science, history, art). Language support improves accessibility in multilingual classrooms.

- E-commerce and Retail: Online sellers can use the model to create product visuals, retouch shots, or localize designs for each region.

- Social Media and Content Creation: For influencers and content creators, Qwen-VLo provides fast, high-quality image generation without relying on traditional design software.

Key benefits

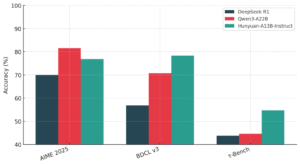

Qwen-VLo stands out in the current LMM (Large Multimodal Model) landscape by offering:

- Seamless text-to-image and image-to-text transitions

- Localized content generation in multiple languages

- Outputs suitable for commercial use

- An editable and interactive generation pipeline

Its design supports iterative feedback loops and precision edits, which are critical for professional-grade content generation workflows.

Conclusion

Alibaba’s Qwen-VLo pushes the frontier of multimodal AI forward by merging understanding and generation capabilities into a single interactive model. Its flexibility, multilingual support, and progressive generation features make it a valuable tool for a wide range of content-driven industries. As demand for combined visual and language content grows, Qwen-VLo is positioned as a scalable creative assistant ready for global adoption.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. Asif’s most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, reflecting its popularity among readers.