AI institutions develop heterogeneous models for specific tasks but face data scarcity during training. Traditional Federated Learning (FL) supports only homogeneous model collaboration, which requires identical architectures across all clients. However, clients design model architectures for their own unique requirements. Moreover, locally trained models are effort-intensive to produce and contain intellectual property, so sharing them reduces participants' interest in joining collaborations. Heterogeneous Federated Learning (HtFL) addresses these limitations, but the literature lacks a unified benchmark for evaluating HtFL across various domains and aspects.

Background and categories of HtFL methods

Existing FL benchmarks focus on data heterogeneity using homogeneous client models, but ignore real scenarios that involve model heterogeneity. Representative HtFL methods fall into three major categories that address these limitations. Partial parameter sharing methods, such as LG-FedAvg, FedGen, and FedGH, keep heterogeneous feature extractors while assuming homogeneous classifier heads for knowledge transfer. Mutual distillation methods, such as FML, FedKD, and FedMRL, train and share small auxiliary models through distillation techniques. Prototype sharing methods transfer lightweight class-wise prototypes as the global knowledge: they collect local prototypes from clients and aggregate them on the server to guide local training. However, it remains unclear whether existing HtFL methods perform consistently across different scenarios.
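To make the prototype sharing idea concrete, here is a minimal sketch in plain Python. It assumes a prototype is simply the mean feature vector of a class and that the server combines client prototypes with a sample-weighted average. The function names `local_prototypes` and `aggregate_prototypes` are hypothetical and are not taken from any library; specific methods may aggregate or refine prototypes differently.

```python
from collections import defaultdict


def local_prototypes(features, labels):
    """Client side: average the feature vectors of each class.

    Returns {class_id: (mean_vector, sample_count)}.
    """
    sums, counts = {}, defaultdict(int)
    for vec, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(vec)
        sums[label] = [s + v for s, v in zip(sums[label], vec)]
        counts[label] += 1
    return {c: ([s / counts[c] for s in sums[c]], counts[c]) for c in sums}


def aggregate_prototypes(client_protos):
    """Server side: sample-weighted mean of client prototypes per class.

    Weighting by sample count is an assumption made for this sketch.
    """
    sums, totals = {}, defaultdict(int)
    for protos in client_protos:
        for c, (vec, n) in protos.items():
            if c not in sums:
                sums[c] = [0.0] * len(vec)
            sums[c] = [s + n * v for s, v in zip(sums[c], vec)]
            totals[c] += n
    return {c: [s / totals[c] for s in sums[c]] for c in sums}


# Two clients whose (hypothetical) feature extractors differ internally
# but emit features of the same dimension, here 2.
client_a = local_prototypes([[1.0, 0.0], [3.0, 0.0]], [0, 0])
client_b = local_prototypes([[0.0, 4.0], [5.0, 2.0]], [1, 0])
global_protos = aggregate_prototypes([client_a, client_b])
print(global_protos)
```

Only one vector per class travels between client and server, which is why this category can work without a shared model architecture: the clients only need to agree on the feature dimension.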

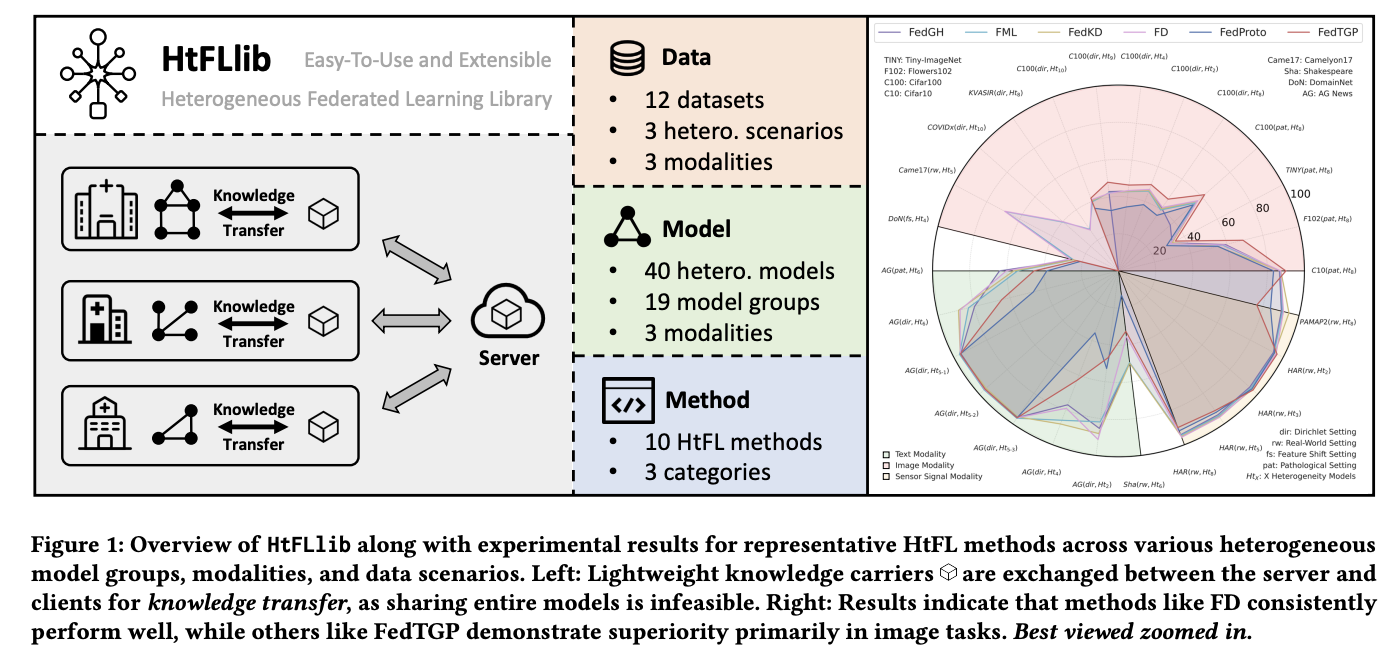

Introducing HtFLlib: a unified benchmark

Researchers from Shanghai Jiao Tong University, Beihang University, Chongqing University, Tongji University, Hong Kong Polytechnic University, and Queen's University Belfast have proposed HtFLlib, a simple and extensible library that integrates multiple datasets and model heterogeneity scenarios. It provides:

- 12 datasets spanning different domains, modalities, and data heterogeneity scenarios.

- 40 model architectures, ranging from small to large, across three modalities.

- A modularized and easy-to-extend HtFL codebase with implementations of 10 representative HtFL methods.

- A systematic evaluation covering accuracy, convergence, computation costs, and communication costs.

Datasets and modalities in HtFLlib

The data heterogeneity scenarios in HtFLlib are divided into three settings: label skew (with pathological and Dirichlet as subsettings), feature shift, and real-world. The library integrates 12 datasets: CIFAR-10, CIFAR-100, Flowers102, Tiny-ImageNet, KVASIR, COVIDx, DomainNet, Camelyon17, AG News, Shakespeare, HAR, and PAMAP2. These datasets vary significantly in domain, data volume, and number of classes, which demonstrates the comprehensive and versatile nature of HtFLlib. The researchers focus mainly on image data, especially in the label skew setting, because image tasks are the most commonly used tasks across fields. The HtFL methods are evaluated on image, text, and sensor signal tasks to assess their respective strengths and weaknesses.
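The two label skew subsettings can be illustrated with a short standard-library sketch. It shows one common way to build such splits and is not necessarily how HtFLlib implements them: the function names, the round-robin class assignment, and the parameters `alpha` and `classes_per_client` are assumptions made for illustration.

```python
import random
from collections import defaultdict


def _indices_by_class(labels):
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    return by_class


def dirichlet_partition(labels, num_clients, alpha, seed=0):
    """Split each class across clients with Dirichlet(alpha) proportions.

    Smaller alpha gives a more skewed label distribution per client.
    """
    rng = random.Random(seed)
    by_class = _indices_by_class(labels)
    clients = [[] for _ in range(num_clients)]
    for y in sorted(by_class):
        idxs = by_class[y]
        rng.shuffle(idxs)
        # A Dirichlet sample is a set of Gamma(alpha, 1) draws, normalized.
        gammas = [rng.gammavariate(alpha, 1.0) for _ in range(num_clients)]
        total = sum(gammas) or 1.0
        start, acc = 0, 0.0
        for k in range(num_clients):
            acc += gammas[k] / total
            if k == num_clients - 1:
                end = len(idxs)  # last client takes the remainder
            else:
                end = min(len(idxs), round(acc * len(idxs)))
            clients[k].extend(idxs[start:end])
            start = end
    return clients


def pathological_partition(labels, num_clients, classes_per_client, seed=0):
    """Give every client samples from only a few classes.

    Classes are assigned round-robin; a class that no client owns is dropped.
    """
    rng = random.Random(seed)
    by_class = _indices_by_class(labels)
    class_ids = sorted(by_class)
    owners = defaultdict(list)
    for k in range(num_clients):
        for j in range(classes_per_client):
            c = class_ids[(k * classes_per_client + j) % len(class_ids)]
            owners[c].append(k)
    clients = [[] for _ in range(num_clients)]
    for c, ks in owners.items():
        idxs = by_class[c]
        rng.shuffle(idxs)
        for pos, idx in enumerate(idxs):
            clients[ks[pos % len(ks)]].append(idx)
    return clients


# Toy data: 1000 samples, 10 balanced classes.
labels = [i % 10 for i in range(1000)]
dir_parts = dirichlet_partition(labels, num_clients=5, alpha=0.1)
patho_parts = pathological_partition(labels, num_clients=5, classes_per_client=2)
```

In the Dirichlet split every client can in principle see every class but in very uneven amounts, while in the pathological split each client sees only its assigned classes.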

Performance analysis: image modality

For image data, most HtFL methods show decreased accuracy as model heterogeneity increases. FedMRL shows superior strength through its combination of auxiliary global and local models. When heterogeneous classifiers are introduced, which makes partial parameter sharing methods inapplicable, FedTGP maintains its superiority across different settings thanks to its adaptive prototype refinement ability. Medical dataset experiments with black-boxed pre-trained heterogeneous models show that HtFL improves model quality compared with the pre-trained models alone and achieves greater improvements than auxiliary-model methods such as FML. For text data, the advantages of FedMRL in label skew settings diminish in real-world settings, while FedProto and FedTGP perform relatively poorly compared with image tasks.

Conclusion

In conclusion, the researchers introduced HtFLlib, a framework that addresses the critical gap in HtFL benchmarking by providing unified evaluation standards across diverse domains and scenarios. HtFLlib's modular design and extensible architecture offer a detailed benchmark for both research and practical applications of HtFL. Moreover, its ability to support heterogeneous models in collaborative learning opens the way for future research into using complex pre-trained large models, black-box systems, and varied architectures across different tasks and modalities.

Check out the Paper and GitHub page. All credit for this research goes to the researchers of this project.

Sajjad Ansari is a final-year undergraduate at IIT Kharagpur. As a tech enthusiast, he explores the practical applications of AI, with a focus on understanding the impact of AI technologies and their real-world implications. He aims to explain complex AI concepts in a clear and accessible manner.