Following the exciting launches of Gemma 3 and Gemma 3 QAT, our family of open models capable of running on a single cloud or desktop accelerator, we're taking our vision further. Gemma 3 delivers powerful capabilities for developers, and we are now extending that vision to highly capable, real-time AI that runs directly on the devices you use every day: your phones, tablets and laptops.

To power the next generation of on-device AI and support a diverse range of applications, including advancing the capabilities of Gemini Nano, we engineered a new, cutting-edge architecture. This next-generation foundation was created in close collaboration with mobile hardware leaders such as Qualcomm Technologies, MediaTek and Samsung's System LSI business, and is optimized for lightning-fast, multimodal AI, enabling personal and private experiences directly on your device.

Gemma 3n is our first open model built on this groundbreaking, shared architecture, allowing developers to start experimenting with this technology today in an early preview. The same advanced architecture also powers the next generation of Gemini Nano, which brings these capabilities to a broad range of features in Google apps and our on-device ecosystem, and will become available later this year. Gemma 3n lets you start building on this foundation, which will come to major platforms such as Android and Chrome.

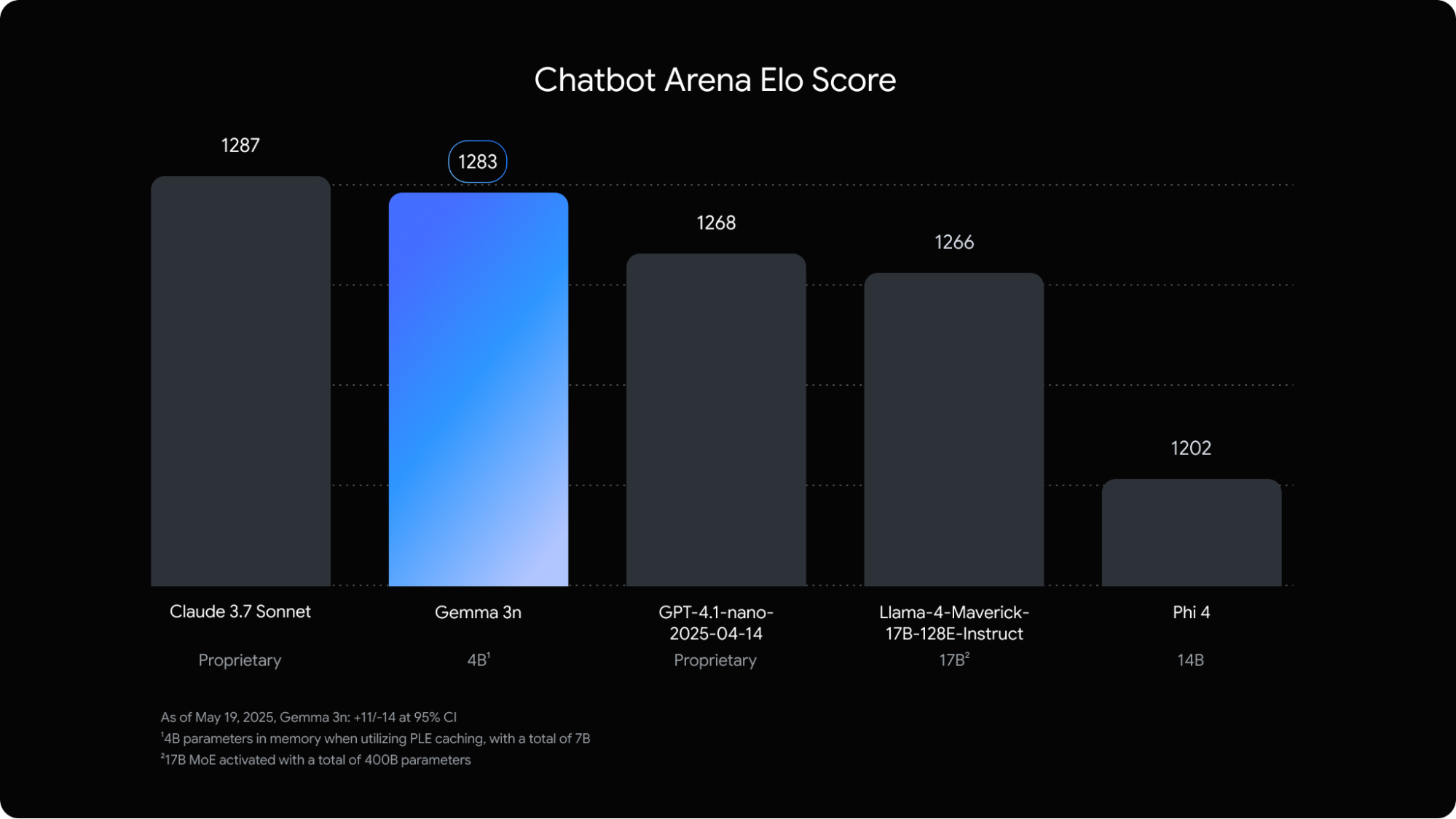

This chart ranks AI models by Chatbot Arena Elo scores; higher scores (top numbers) indicate greater user preference. Gemma 3n ranks highly among both popular proprietary and open models.
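Elo-style ratings sit on a logistic scale, so a rating gap corresponds to an expected head-to-head preference rate. The sketch below uses the standard Elo expected-score formula with the conventional 400-point scale; that the leaderboard's scores follow exactly this convention is an assumption, and the ratings passed in are hypothetical rather than read from the chart.

```python
def expected_preference(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Probability that model A is preferred over model B under the Elo model."""
    # Standard Elo expected score; assumes the conventional 400-point scale.
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / scale))


# Hypothetical ratings, not values taken from the chart.
print(round(expected_preference(1300, 1200), 3))  # 100-point lead -> 0.64
print(expected_preference(1250, 1250))            # equal ratings -> 0.5
```

Under this model, a 100-point lead means the higher-rated model is expected to be preferred in roughly 64% of pairwise comparisons.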

Gemma 3n leverages a Google DeepMind innovation called Per-Layer Embeddings (PLE) that significantly reduces RAM usage. While the raw parameter counts are 5B and 8B, this innovation lets you run larger models on mobile devices or live-stream from the cloud with a memory overhead comparable to a 2B and 4B model, meaning the models can operate with a dynamic memory footprint of just 2GB and 3GB. Learn more in our documentation.
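The footprint claim is easiest to read as arithmetic over which weights must stay resident in accelerator memory. The sketch below assumes the per-layer embedding parameters can be held outside accelerator memory; the 3B offloaded figure is chosen only to match the "5B raw, comparable to 2B" framing above, and 1 byte per parameter (8-bit weights) is likewise an assumption. `resident_weight_gb` is a hypothetical helper, and this is not the published memory breakdown.

```python
def resident_weight_gb(total_params: float, offloaded_params: float,
                       bytes_per_param: float) -> float:
    """Weights kept in accelerator memory, in decimal gigabytes (hypothetical helper)."""
    if not 0 <= offloaded_params <= total_params:
        raise ValueError("offloaded_params must be between 0 and total_params")
    resident_params = total_params - offloaded_params
    return resident_params * bytes_per_param / 1e9


# Assumptions: 5B raw parameters, ~3B of them per-layer embeddings held outside
# accelerator memory, and 8-bit weights (1 byte per parameter).
all_resident = resident_weight_gb(5e9, 0, bytes_per_param=1.0)
with_offload = resident_weight_gb(5e9, 3e9, bytes_per_param=1.0)
print(f"all weights resident: {all_resident:.1f} GB")      # 5.0 GB
print(f"PLE kept off-accelerator: {with_offload:.1f} GB")  # 2.0 GB
```

Real footprints also include activations and the KV cache, which this estimate ignores.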

By exploring Gemma 3n, developers can get an early preview of the open model's core capabilities and the mobile-first architectural innovations that will be available on Android and Chrome with Gemini Nano.

In this post, we'll explore Gemma 3n's new capabilities, our approach to responsible development, and how you can access the preview today.

Key capabilities of Gemma 3n

Engineered for fast, low-footprint AI experiences running locally, Gemma 3n delivers:

- Optimized on-device performance and efficiency: Gemma 3n starts responding approximately 1.5x faster on mobile with significantly better quality (compared to Gemma 3 4B), aided by innovations such as Per-Layer Embeddings, KV cache (KVC) sharing and advanced activation quantization.

- Many-in-1 flexibility: A model with a 4B active memory footprint that natively includes a nested, state-of-the-art submodel with a 2B active memory footprint (thanks to MatFormer training). This provides the flexibility to trade off performance and quality on the fly without hosting separate models. We further introduce a mix'n'match capability in Gemma 3n to dynamically create submodels from the 4B model that best fit your specific use case and its associated quality/latency trade-off. Stay tuned for more on this research in our upcoming technical report.

- Privacy-first and offline ready: Local execution enables features that respect user privacy and work reliably, even without an internet connection.

- Expanded multimodal understanding with audio: Gemma 3n can understand and process audio, text and images, and offers significantly enhanced video understanding. Its audio capabilities enable the model to perform high-quality automatic speech recognition (transcription) and translation (speech to translated text). In addition, the model accepts interleaved inputs across modalities, enabling understanding of complex multimodal interactions. (Public implementation coming soon.)

- Improved multilingual capabilities: Multilingual performance is improved, particularly in Japanese, German, Korean, Spanish and French, as reflected in strong results on multilingual benchmarks such as 50.1% on WMT24++ (ChrF).
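The first capability above credits advanced activation quantization, among other techniques, but the post does not describe the scheme. As a generic illustration only, not Gemma 3n's method, symmetric per-tensor int8 quantization stores each activation as an 8-bit integer plus one shared floating-point scale, trading a bounded rounding error for a representation 4x smaller than float32. Function names and sample values are hypothetical.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: q = round(x / scale)."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Map the largest magnitude to 127; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from int8 codes and the shared scale."""
    return [x * scale for x in q]


activations = [0.5, -1.27, 0.01, 1.0]  # hypothetical activation values
q, scale = quantize_int8(activations)
restored = dequantize_int8(q, scale)
max_err = max(abs(a - r) for a, r in zip(activations, restored))
print(q)        # [50, -127, 1, 100]
print(max_err)  # rounding error, bounded by scale / 2
```

Per-tensor scaling is the simplest variant; per-channel or per-token scales typically reduce error when activation ranges vary widely, at the cost of storing more scales.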
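The multilingual result is reported in chrF, a character n-gram F-score. The sketch below is a simplified sentence-level version following the original formulation: average character n-gram precision and recall over n = 1 to 6 (spaces removed), then combine them with an F-score that weights recall more heavily (beta = 2). Reference toolkits such as sacreBLEU differ in details like averaging and corpus-level aggregation, so this sketch will not reproduce published benchmark scores; the function names are illustrative.

```python
from collections import Counter


def char_ngrams(text: str, n: int) -> Counter:
    """Count character n-grams, ignoring spaces as chrF does by default."""
    chars = text.replace(" ", "")
    return Counter(chars[i:i + n] for i in range(len(chars) - n + 1))


def chrf(hypothesis: str, reference: str, max_n: int = 6, beta: float = 2.0) -> float:
    """Simplified sentence-level chrF on a 0-100 scale."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp, ref = char_ngrams(hypothesis, n), char_ngrams(reference, n)
        if not hyp or not ref:
            continue  # skip orders longer than one of the strings
        overlap = sum((hyp & ref).values())  # clipped n-gram matches
        precisions.append(overlap / sum(hyp.values()))
        recalls.append(overlap / sum(ref.values()))
    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p == 0 and r == 0:
        return 0.0
    b2 = beta ** 2
    return 100 * (1 + b2) * p * r / (b2 * p + r)


print(chrf("the cat sat", "the cat sat"))  # 100.0
print(chrf("abc", "xyz"))                  # 0.0
print(round(chrf("the cat sat", "the cat sits"), 1))
```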

This chart shows MMLU performance vs. model size for Gemma 3n's mix-n-match capability.
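The post leaves MatFormer and mix'n'match for the upcoming technical report. As a toy illustration of the nesting idea from the published MatFormer work (how it maps onto Gemma 3n is an assumption), each feed-forward layer orders its hidden units so that any prefix is itself a valid smaller layer, and a mix'n'match submodel is simply a choice of width per layer. The class, sizes and widths are hypothetical; this is not Gemma 3n's architecture or API.

```python
import random


class NestedFFN:
    """A toy feed-forward block whose first `width` hidden units form a
    smaller, self-contained FFN (MatFormer-style nesting)."""

    def __init__(self, d_model: int, d_hidden: int, seed: int = 0):
        rng = random.Random(seed)
        self.d_model, self.d_hidden = d_model, d_hidden
        # w_in[j] maps the input to hidden unit j; w_out[i][j] maps unit j to output i.
        self.w_in = [[rng.gauss(0.0, 0.1) for _ in range(d_model)] for _ in range(d_hidden)]
        self.w_out = [[rng.gauss(0.0, 0.1) for _ in range(d_hidden)] for _ in range(d_model)]

    def forward(self, x: list[float], width: int) -> list[float]:
        if not 1 <= width <= self.d_hidden:
            raise ValueError("width must be between 1 and d_hidden")
        # Use only the first `width` hidden units (ReLU); the rest are skipped.
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in self.w_in[:width]]
        return [sum(w * hj for w, hj in zip(row[:width], h)) for row in self.w_out]

    def param_count(self, width: int) -> int:
        return 2 * self.d_model * width  # input and output projections, no biases


# Hypothetical 3-layer toy model; the widths chosen per layer define a submodel.
layers = [NestedFFN(d_model=4, d_hidden=8, seed=i) for i in range(3)]


def run(x: list[float], widths: list[int]) -> list[float]:
    for layer, width in zip(layers, widths):
        x = [xi + yi for xi, yi in zip(x, layer.forward(x, width))]  # residual add
    return x


def total_params(widths: list[int]) -> int:
    return sum(layer.param_count(w) for layer, w in zip(layers, widths))


full, small, mixed = [8, 8, 8], [4, 4, 4], [8, 4, 6]  # mixed: per-layer choice
for name, widths in [("full", full), ("small", small), ("mix'n'match", mixed)]:
    print(name, total_params(widths), [round(v, 3) for v in run([1.0, 0.5, -0.5, 0.0], widths)])
```

Because every submodel reuses a prefix of the same weights, switching widths needs no separate checkpoint, which is the property the Many-in-1 capability describes.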

Unlocking new on-the-go experiences

Gemma 3n will empower a new wave of intelligent, on-the-go applications by enabling developers to:

1. Build live, interactive experiences that understand and respond to real-time visual and auditory cues from the user's environment.

2. Power deeper understanding and contextual text generation using combined audio, image, video and text inputs, all processed privately on-device.

3. Develop advanced audio-centric applications, including real-time speech transcription, translation and rich voice-driven interactions.

Here's an overview and the types of experiences you can build:

Building responsibly, together

Our commitment to responsible AI development is paramount. Gemma 3n, like all Gemma models, underwent rigorous safety evaluations, data governance and fine-tuning alignment with our safety policies. We approach open models with careful risk assessment, continually refining our practices as the AI landscape evolves.

Get started: preview Gemma 3n today

We're excited to get Gemma 3n into your hands through a preview starting today:

Initial access (available now):

- Cloud-based exploration with Google AI Studio: Try Gemma 3n directly in your browser on Google AI Studio, with no setup required. Explore its text input capabilities instantly.

- On-device development with Google AI Edge: For developers looking to integrate Gemma 3n locally, Google AI Edge provides tools and libraries. You can get started today with its text and image understanding/generation capabilities.

Gemma 3n marks the next step in democratizing access to cutting-edge, efficient AI. We're excited to see what you'll build as we make this technology progressively available, starting with today's preview.

Explore this announcement and all Google I/O 2025 updates on io.google starting May 22.