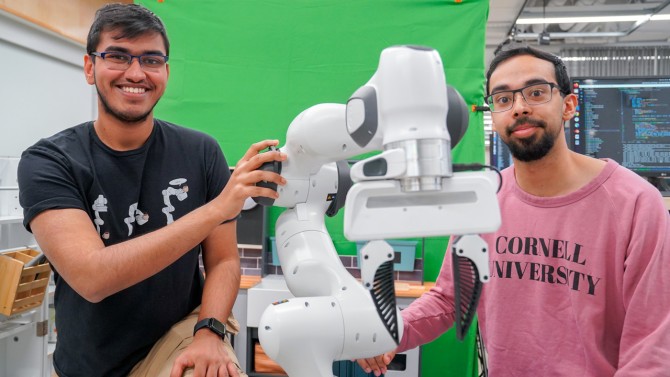

Kushal Kedia (left) and Prithvi Dan (right) are members of the development team behind RHyME, a system that allows robots to learn tasks by watching a single how-to video.

By Louis DiPietro

Cornell researchers have developed a new robotic framework powered by artificial intelligence, called RHyME (Retrieval for Hybrid Imitation under Mismatched Execution), that allows robots to learn tasks by watching a single how-to video. RHyME could fast-track the development and deployment of robotic systems by significantly reducing the time, energy and money needed to train them, the researchers said.

“One of the annoying things about working with robots is collecting so much data on the robot doing different tasks,” said Kushal Kedia, a doctoral student in the field of computer science and lead author of the corresponding paper on RHyME. “That's not how humans do tasks. We look at other people as inspiration.”

Kedia will present the paper, “One-Shot Imitation under Mismatched Execution,” in May at the Institute of Electrical and Electronics Engineers' International Conference on Robotics and Automation in Atlanta.

Home robot assistants are still a long way off: training robots to cope with all the potential scenarios they could encounter in the real world is a very difficult task. To get robots up to speed, researchers like Kedia are training them with what amounts to how-to videos, that is, human demonstrations of various tasks in a lab setting. The hope with this approach, a branch of machine learning called “imitation learning,” is that robots will learn sequences of tasks faster and be able to adapt to real-world environments.

“Our work is like translating French to English: we're translating any given task from human to robot,” said senior author Sanjiban Choudhury, assistant professor of computer science in the Cornell Ann S. Bowers College of Computing and Information Science.

This translation task still faces a broader challenge, however: humans move too fluidly for a robot to track and mimic, and training robots with video requires large amounts of it. Further, video demonstrations (of, say, picking up a napkin or stacking dinner plates) must be performed slowly and flawlessly, since any mismatch in actions between the video and the robot has historically spelled doom for robot learning, the researchers said.

“If a human moves in a way that's any different from how a robot moves, the method immediately falls apart,” Choudhury said. “Our thinking was, ‘Can we find a principled way to deal with this mismatch between how humans and robots do tasks?’”

RHyME is the team's answer: a scalable approach that makes robots less finicky and more adaptive. It trains a robotic system to store previous examples in a memory bank and, when performing a task it has viewed only once, to connect the dots by drawing on videos it has already seen. For example, a RHyME-equipped robot shown a video of a human fetching a mug from the counter and placing it in a nearby sink will comb its bank of videos and draw inspiration from similar actions, such as grasping a cup and lowering a utensil.
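To make the memory-bank idea concrete, here is a minimal Python sketch of nearest-neighbour retrieval. It is a simplified illustration under stated assumptions, not RHyME's actual implementation: the `RobotClip` class, the `retrieve_clips` helper, the choice of cosine similarity, and the toy two-dimensional embeddings are all hypothetical. A real system would obtain embeddings from a learned video encoder, which the sketch leaves out.

```python
import math
from dataclasses import dataclass


@dataclass
class RobotClip:
    """Hypothetical memory-bank entry: a short label plus a feature embedding."""
    label: str
    embedding: list[float]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_clips(
    human_segments: list[list[float]],
    memory_bank: list[RobotClip],
    k: int = 1,
) -> list[list[str]]:
    """For each human video segment embedding (hypothetical), return the labels
    of the k most similar robot clips in the memory bank, best match first."""
    plan = []
    for segment in human_segments:
        ranked = sorted(
            memory_bank,
            key=lambda clip: cosine_similarity(segment, clip.embedding),
            reverse=True,
        )
        plan.append([clip.label for clip in ranked[:k]])
    return plan


if __name__ == "__main__":
    # Toy memory bank with made-up 2-D embeddings, for illustration only.
    bank = [
        RobotClip("grasp cup", [1.0, 0.0]),
        RobotClip("lower utensil", [0.0, 1.0]),
        RobotClip("wipe table", [-1.0, 0.0]),
    ]
    # Made-up embeddings for "fetch mug from counter" and "place it in sink".
    human_video = [[0.9, 0.1], [0.2, 0.8]]
    print(retrieve_clips(human_video, bank))  # [['grasp cup'], ['lower utensil']]
```

Matching each human segment independently is the simplest possible choice; it ignores the order of the segments and any deeper differences between how humans and robots execute a motion, which a practical system would need to handle.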

RHyME paves the way for robots to learn multiple-step sequences while significantly reducing the amount of robot data needed for training, the researchers said. RHyME requires just 30 minutes of robot data; in a lab setting, robots trained using the system achieved a more than 50% increase in task success compared to previous methods.

“This work is a departure from how robots are programmed today. The status quo of programming robots is thousands of hours of tele-operation to teach the robot how to do tasks. That's just impossible,” Choudhury said. “With RHyME, we're moving away from that and learning to train robots in a more scalable way.”

This research was supported by Google, OpenAI, the U.S. Office of Naval Research and the National Science Foundation.

Read the full work

One-Shot Imitation under Mismatched Execution, Kushal Kedia, Prithvi Dan, Angela Chao, Maximus Adrian Pace, Sanjiban Choudhury.

Tags: ICRA, ICRA 2025

Cornell University